My Dell R710 saga

In early summer 2024, personal circumstances made me decide to leave my apartment in South London. I loved many things about that apartment, including a nice little niche in the storage room beside the FTTH GPON router, where I kept some computer equipment. That included an HP N40L I used to hold a secondary copy of my historical data. The system was running FreeBSD, equipped with 2x1TB SSDs for boot and 4x12TB spinning HDDs for the data itself.

Leaving the apartment also meant I had to say goodbye to my secondary copy. Then I remembered that I had some decommissioned hardware in my storage unit, including a pair of Dell R710 servers. I liked that those specific servers have six 3.5" bays, which is exactly the number of bays I needed to replace my N40L. With a 2U form factor, I could look for a colocation provider and get my secondary copy back. I should have been concerned about the R710's power consumption. But honestly, I wanted to avoid buying a new server at this stage.

So I went to my storage unit, and my first thought was: “Will the R710 boot again after almost five years in a storage unit?”

Despite my doubts, it booted, but with 12 failed DIMMs. Luckily, I had a twin R710 from which I took the DIMMs, so it has 144GB of RAM again (I have ordered new spare DIMMs).

I tried to boot the system from one SSD. I decided not to trust the PERC H700, which I had configured in RAID0. As it turned out, I was right: I remembered correctly that those RAID controllers write their configuration to the first few bytes of the disk, which corrupted the GPT. (I managed to restore the table afterwards.)

I impulsively purchased an LSI 9261-8i controller on eBay, hoping that the card would support passthrough as well as RAID. I was wrong. And my “hardware fairy” was right once again. So, I purchased a pre-modded 9211-8i in IT mode. It worked like a charm with the boot SSDs, so I took courage and put all four 12TB HDDs in the trays.

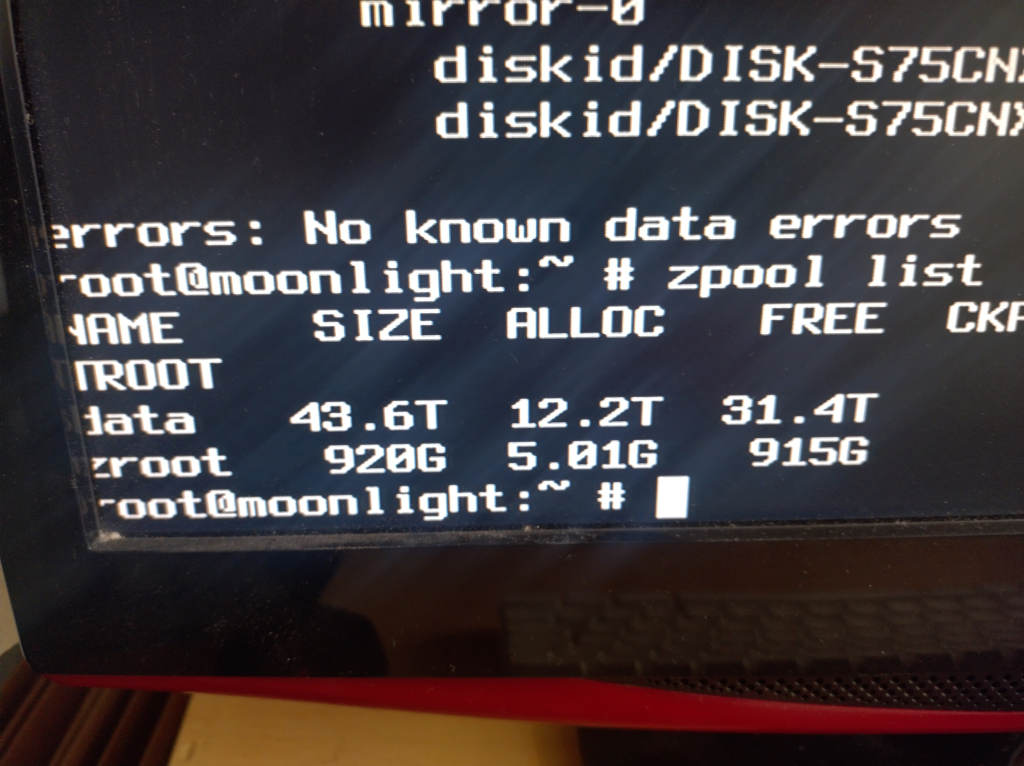

The result is the one you see in the picture. I was able to “lift and shift” the system from the HP N40L to the Dell R710. Of course, it runs FreeBSD.

The system was almost ready for colocation. I planned to install two PCIe adapters, each holding two M.2 NVMe drives, so I could have a redundant L2ARC cache and a dedicated fast storage pool for VMs and jails.

The first good news is that the R710 finally found a home in my new colocation in Milan, Italy.

Unfortunately, the Dell rails I had did not fit in the new rack, so I had to use a pair that were … ahem … borrowed from the colo provider. Apparently, the rack is somewhat longer than usual. From what I saw in the other racks, others have that problem too.

I installed the two PCIe NVMe adapters. However, I had second thoughts about L2ARC. From what I’ve read and given my usage, I would not benefit from an L2ARC, and it’s probably better to have a well-tuned in-memory ARC. I also considered using either a write cache or a special (mirrored) device for ZFS metadata. But given that the spinning disks are mostly for archive and disaster recovery, I don’t think I will benefit from those either.

Instead, I installed two 1TB NVMe drives on different cards, configured as a ZFS mirror, to hold virtual machines and jails. I believe that’s plenty of space for what I need, but I left the two remaining slots free to expand that pool or for future use.
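For readers who have not built such a pool, here is a minimal sketch of what a two-way mirror amounts to on the command line. It only assembles and prints the `zpool create` invocation; it does not run it. The pool name `fast` and the device names `nda0` and `nda1` are placeholders chosen for illustration, not the names used on this machine.

```python
import shlex


def zpool_mirror_command(pool, devices):
    """Return the argv for `zpool create <pool> mirror <dev> <dev> ...`."""
    if len(devices) < 2:
        raise ValueError("a mirror needs at least two devices")
    if len(set(devices)) != len(devices):
        raise ValueError("each device may appear only once")
    return ["zpool", "create", pool, "mirror", *devices]


# Hypothetical names: one NVMe drive on each adapter card.
cmd = zpool_mirror_command("fast", ["nda0", "nda1"])
print(shlex.join(cmd))
```

Putting the two halves of the mirror on different cards means a single failed adapter takes out only one side of the pool.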

I bought a Schuko PDU and a shelf to hold the router and the switch. The former is the only new equipment in the rack. Everything else I already had in my storage unit. The switch is an old 8-port managed 3Com, divided into 3 VLANs.

The router is an exact copy of the router I have in another location, with 4x2.5G Ethernet ports, running plain FreeBSD, automated through Ansible, and terminating the VPNs. In the future, I would like to experiment with VXLANs and BGP… and maybe EVPN, once FreeBSD supports it.

The picture shows the final installation.

It’s not much, but it’s a start. I need to start somewhere, right? I hope I have made my hardware fairy proud.

So, what’s next?

I have a twin R710 that I’m refurbishing. It will mostly hold virtual machines for community projects and will have a different disk layout. I also plan to buy a decent switch. I want something rack-mountable and managed, with at least 16 ports at 2.5G or faster (with 2x10G). In the future, I also want to build a machine for a private LLM, as I have a specific use case for that, but that will definitely not happen before next year.